A few months back, I shared a guide on uploading Autopilot hardware hash files directly to blob storage from your target machines – here. Recently, I aimed to automate the rest of the process and found it quite the journey. While this might seem like overkill for collecting hardware hash files, it showcases some of Azure’s free or low-cost tools. I still believe that using an app registration is the easiest route for registering existing non-Intune managed devices with Autopilot. However, if you don’t have the option to use an app registration and need to register thousands of production machines, this post might be beneficial.

Not having much experience with Azure Functions or Logic Apps, I explored how to use these tools to merge the uploaded CSV files. I frequently came across blogs and articles suggesting Data Factory for data merging and manipulation, and I discovered a YouTube video that serves as a fantastic tutorial for this. Data Factory conveniently handles the initial task of merging the CSV hash files. I then needed a way to deliver the merged file to myself or the IT team. Initially, I considered using a Teams incoming webhook. However, since webhooks don’t allow attachments, I opted for the Teams email-to-channel feature. An extra advantage of sending to Teams is that the hash files can be stored in SharePoint rather than in a shared or individual mailbox.

I won’t rehash everything from my original post regarding the script for uploading hardware hash files to blob storage. I’ll assume you’ve read that and will continue from where it left off. The procedure remains mostly the same, except that you’ll create two storage containers instead of one; I’ll detail that below. In the end, you’ll have a blob storage account that collects hardware hash files from your machines, a Data Factory pipeline that merges all the hash files, and a Logic App that sends the merged file to your designated Teams channel. The files are automatically deleted from the storage account once the Logic App run completes. Here’s everything in action from beginning to end:

Create Your Storage Account Containers

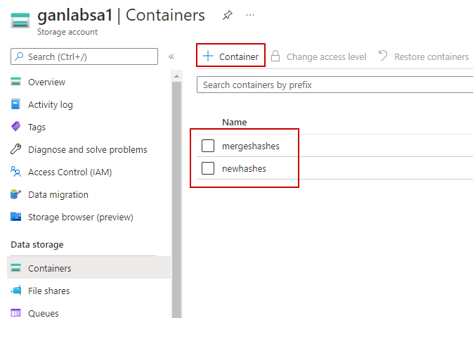

The process of creating the storage account is detailed in the previous post. For this setup, however, you will need two containers: one for the incoming hash files and another as the destination for Data Factory’s merged CSV (I realized much later that I had a typo in the name of my mergedhashes container). Create your two containers as displayed below:

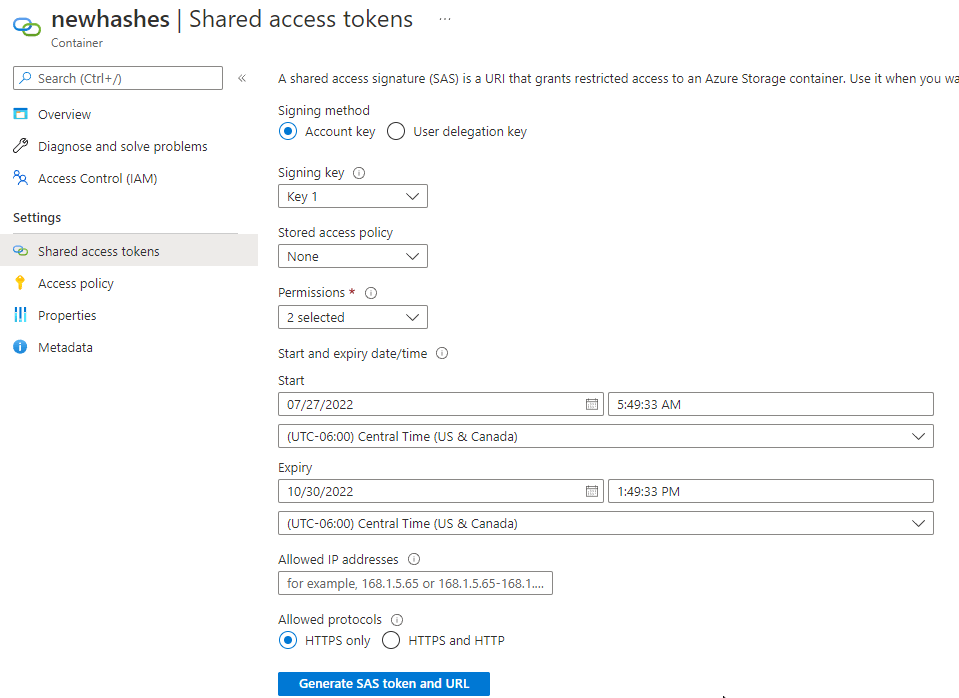

Next, generate a Blob SAS token with create and write permissions. You’ll need this for the script you’ll be running on client machines to upload the hash.csv files. Refer to the previous post for further details on this.

Once you have created your containers, you can start executing the script on client machines, and the hash.csv files will begin showing up in your container (don’t forget to include your SAS blob URL) – link to the script.
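To illustrate what an upload with a SAS URL looks like on the wire, here is a minimal Python sketch. It is not the script from the previous post; the function names, the example account and container names, and the file name are all hypothetical. It assumes a container-level SAS URL with create and write permissions, and it uses the Blob service's Put Blob call (an HTTP PUT with the `x-ms-blob-type: BlockBlob` header).

```python
import urllib.request
from urllib.parse import quote, urlsplit, urlunsplit


def blob_upload_url(container_sas_url: str, blob_name: str) -> str:
    """Insert the blob name into the path of a container SAS URL,
    keeping the SAS query string (the token) intact."""
    parts = urlsplit(container_sas_url)
    path = parts.path.rstrip("/") + "/" + quote(blob_name)
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, ""))


def upload_hash_file(container_sas_url: str, local_path: str, blob_name: str) -> int:
    """Upload one hash CSV as a block blob and return the HTTP status code."""
    with open(local_path, "rb") as f:
        data = f.read()
    request = urllib.request.Request(
        blob_upload_url(container_sas_url, blob_name),
        data=data,
        method="PUT",
        headers={
            "x-ms-blob-type": "BlockBlob",  # required by Put Blob
            "Content-Type": "text/csv",
        },
    )
    with urllib.request.urlopen(request) as response:
        return response.status


# Hypothetical usage (account, container, and token are placeholders):
# upload_hash_file(
#     "https://myaccount.blob.core.windows.net/hashes?sv=...&sig=...",
#     "hash.csv",
#     "PC01-hash.csv",
# )
```

Giving each blob a per-machine name (for example, one based on the computer name) keeps uploads from overwriting each other in the incoming container.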

Create a New Data Factory

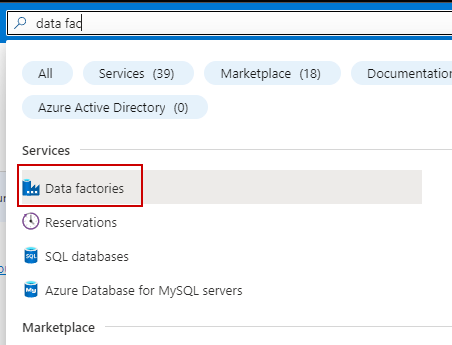

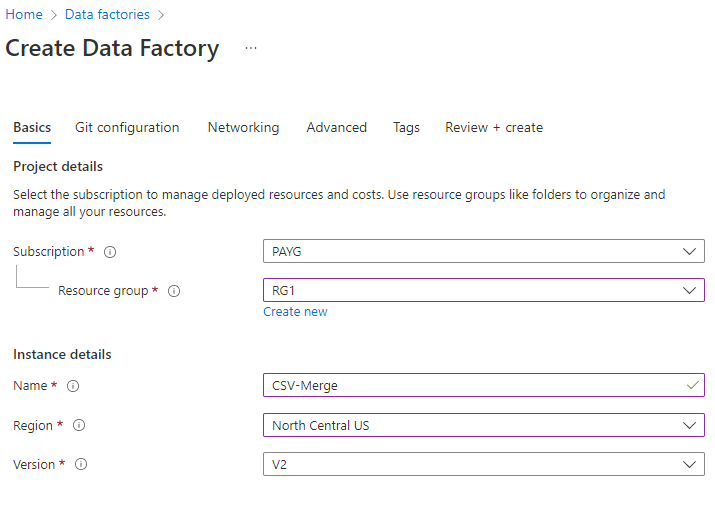

Data Factory is a powerful tool and can perform much more than just merging CSV files, but we will focus on this specific task. Look for Data Factories in Azure, and create a new one:

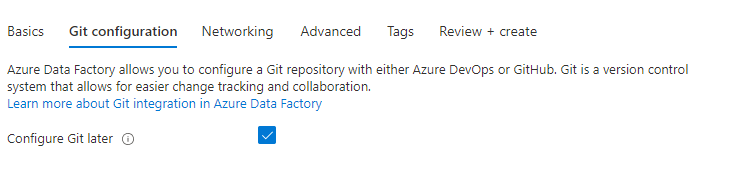

Select Configure Git later, and choose the public endpoint option on the Networking tab.
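For intuition about what the merge step is meant to produce, here is a small local Python sketch of the same idea: combine many single-device hash CSVs into one file with a single header row. This is not how Data Factory does it internally, and the function name is hypothetical. It assumes every uploaded file shares the same header (the sample uses the standard Autopilot columns: Device Serial Number, Windows Product ID, Hardware Hash).

```python
import csv
import io


def merge_hash_csvs(csv_texts):
    """Merge CSV documents that share a header into one CSV string.

    The header is taken from the first non-empty input; header rows of
    later inputs are dropped so the result has exactly one.
    """
    header = None
    merged_rows = []
    for text in csv_texts:
        rows = list(csv.reader(io.StringIO(text)))
        if not rows:
            continue  # skip empty uploads
        if header is None:
            header = rows[0]
        # Keep data rows only, ignoring blank lines.
        merged_rows.extend(row for row in rows[1:] if row)

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    if header is not None:
        writer.writerow(header)
    writer.writerows(merged_rows)
    return out.getvalue()


# Two made-up single-device files, as a client script might upload them.
file_a = "Device Serial Number,Windows Product ID,Hardware Hash\nSN1,,HASH1\n"
file_b = "Device Serial Number,Windows Product ID,Hardware Hash\nSN2,,HASH2\n"
merged = merge_hash_csvs([file_a, file_b])
```

Here `merged` holds one header line followed by one row per device, which is the shape Autopilot expects for a bulk import.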
